Is OpenAI already the biggest financial adviser?

When OpenAI’s ChatGPT reached 100 million monthly active users in January 2023, it became the fastest-growing consumer application in history. The interactive chatbot is powered by the latest development in AI, large language models (LLMs; check out our white paper if these are new to you), and it continues to generate plenty of excited coverage about the future of AI-based tools. Despite this, it’s difficult to get accurate insight into what people are actually doing with these tools today.

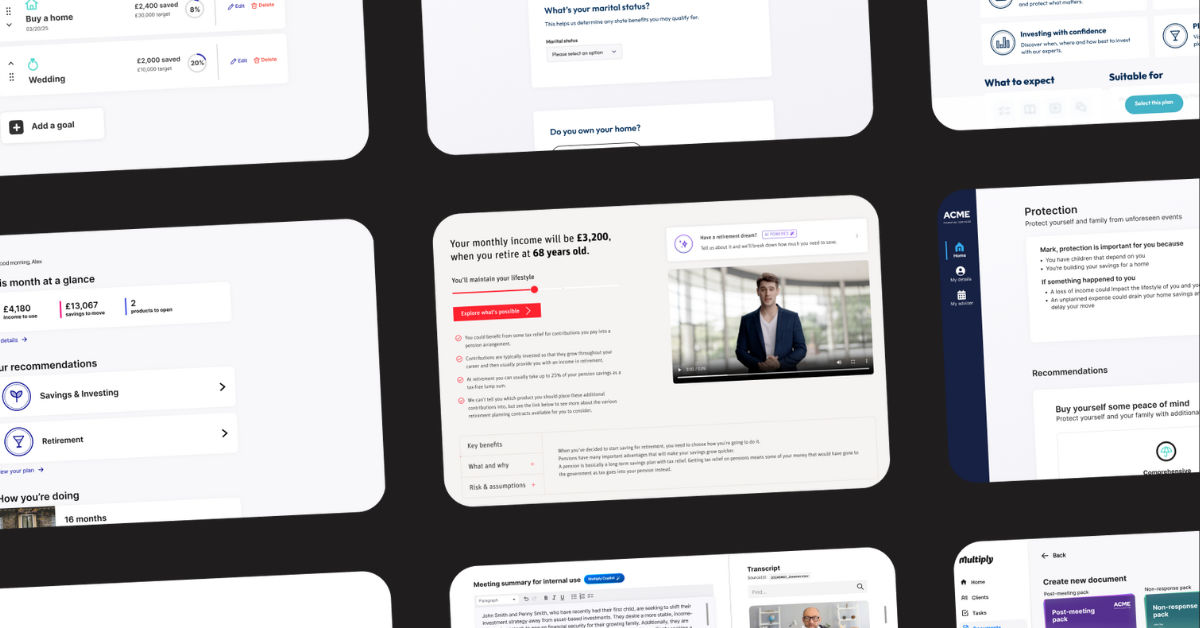

Right now, relatively few products incorporate these technologies directly into their consumer offerings (though it seems only a matter of time before plenty do). In the meantime, customers can use general-purpose AI agents directly from the source. Of these, ChatGPT is the most famous, although the likes of Anthropic’s Claude AI and Google’s Bard are likely to be hot on its heels. So what are the people using these services actually doing?

Undoubtedly, a reasonable number of users are looking to automate the more boring parts of their jobs, sometimes to their downfall. Similarly, teachers are finding that students increasingly hand in homework and coursework crafted with the help of an AI tool, to the extent that some now expect students to complete their work on a service like Google Docs, where the document’s version history can be audited for signs of cheating.

But ChatGPT and its brethren are also being used in many other roles: as personal assistants, as private tutors and even as therapists.

These AI agents use a range of techniques to keep them on the straight and narrow, but the nature and architecture of current AI technology make this fundamentally difficult (we discuss this in much more detail here). The result is that customers are relatively free to ask AI whatever questions they like, and the AI agents are relatively free to answer them.

Financial advice questions are no different. AI agents can (and will) recommend investment options. They can break down why you should consider transferring your DB pension. They’ll even write you a simple program to help you project your pension forward to your retirement.
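As a rough illustration of the kind of simple pension projection program described above, here is a minimal Python sketch. It is not output from ChatGPT, and the function name `project_pension` and its parameters are hypothetical. It assumes a constant annual growth rate, contributions added at the end of each year, and no fees, tax or inflation; a real projection would need to account for all of these.

```python
from typing import List


def project_pension(current_pot: float, annual_contribution: float,
                    annual_growth_rate: float, years: int) -> List[float]:
    """Project a pension pot forward, returning its value at the end of each year.

    Hypothetical sketch: assumes a constant growth rate, contributions added
    at the end of each year, and ignores fees, tax and inflation.
    """
    pot = current_pot
    history = []
    for _ in range(years):
        # Apply a year of growth to the existing pot, then add that year's contribution
        pot = pot * (1 + annual_growth_rate) + annual_contribution
        history.append(pot)
    return history


if __name__ == "__main__":
    # Illustrative inputs only: a £50,000 pot, £6,000 a year, 5% growth, 20 years
    projection = project_pension(50_000, 6_000, 0.05, 20)
    print(f"Projected pot after 20 years: £{projection[-1]:,.0f}")
```

Even a toy like this shows why the caveats matter: the final figure is highly sensitive to the assumed growth rate, which is exactly the kind of assumption a customer might not think to question.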

AI agents will already give you a top 3 list of providers for a given financial product, and they can recommend their preferred option. They are, in short, acting as financial advisers.

This isn’t just theoretical. There are now countless blog posts about using ChatGPT to generate financial advice, and social networks like Reddit host active forums where people share and refine techniques for getting the best advice out of the AI. OpenAI are aware enough of this (and of the potential pitfalls) that the AI is clearly trained to defer to human financial advisers as much as possible, often framing any recommendations it does give with caveats about its abilities. Much like the terms and conditions of any online service, however, these caveats are easily skipped over, and the AI can be manipulated into leaving them out entirely.

I don’t think the reality of this has hit home: ChatGPT had 173 million users in April 2023, and by June it was receiving 1.6 billion visits a month. When assessed by number of customers, it’s possible that OpenAI is already the biggest financial advice company in the world.

They do all this with limited regulatory oversight, with no transparency and little control over the recommendations that customers receive.

It’s easy to be sceptical of this claim, and my sense of the industry is that most advisers are not especially concerned about the rise of AI. Many might say that even if LLMs can spit out answers, they are not giving advice. So, what is advice? Definitions of advice (typically set by the FCA) tend to require that an adviser gives a personalised recommendation, usually for a specific financial product, based on their opinion and having taken into account the customer’s personal and financial circumstances. ChatGPT is quite capable of meeting this definition.

And yet, there are still many reasons to dismiss ChatGPT as a real adviser. After all, advice is much more than answering questions; good advice is often as much about asking the right ones. It’s about building an understanding of an individual’s needs and wants, perhaps ones they can’t clearly articulate themselves. It’s about drawing on experience and knowledge of the industry to make recommendations, and then facilitating the changes that need to be made. Can an automated chatbot really match the human-provided advice experience that is the bedrock of the market today?

One of the remarkable things about the latest technology powering AI agents is that, in many ways, it can replicate these more human advice skills. You can prompt ChatGPT to ask you questions and to take an active role in structuring a financial plan, and it will gladly oblige. You can provide it with your factfind, and it will incorporate what it learns about you over the course of a conversation into later recommendations. It’s by no means perfect: it often makes mistakes, especially with numerical calculations (see here for more information), and can be prone to confabulation. But that isn’t really the point I’m trying to make. The reality is that, for many ordinary people, ChatGPT is already capable of providing a service akin to what they might receive from a firm of advisers.

Unfortunately, in practice, there are many complications in delivering advice that mean the service people receive may fall short of the ideal above. Human advice as delivered today is about maximising the number of customers an adviser can serve, to keep costs down. It’s about expecting customers to wade through thick suitability reports where every recommendation is couched in enough risk warnings to make regulators and compliance teams happy. It’s about processing customers with consistency and control at the expense of personalisation, flexibility and speed of response.

These realities are the trade-offs necessary for the current market to work, from both a business and a regulatory perspective, but they can get in the way of what the customer wants. These are disadvantages that AI agents don’t have.

And so, while AI tools might have drawbacks compared to human advisers in some areas, in others they have the edge. Most obviously, you can ask ChatGPT questions at any time of day or night. You can develop those questions into full conversations, taking as long as you want and fitting them around the rest of your life. And, for the most part, ChatGPT gives fairly sensible recommendations: it generally outlines the key factors that should be taken into account and makes suggestions tailored to your situation.

ChatGPT can also give broader advice. Financial advisers typically advise on investments and protection products, and they may recommend savings accounts. It is unusual for them to get involved in budgeting or softer advice (like how to improve your credit score, or how to plan career moves), and even more unusual for them to offer relationship advice or weigh in on personal questions like when to have children. And yet, these questions have huge financial consequences and are often more important to individuals than which fund will give the best returns or have the lowest fees. Customers do not live in the segmented groups the industry often operates in, and ChatGPT is well placed to meet their emotional needs.

Finally, the form factor of advice delivered by AI tools is probably more appealing to a younger demographic. By dismissing the advice capabilities of LLMs (for example, by suggesting that the superior service is one delivered in person or over the phone), advisers risk being cut off from younger generations who have different preferences when interacting with companies and services. It’s hardly a novel observation, but where today’s pensioners would rather pick up the phone and talk to a human being, millennials would generally much rather not have to speak to anyone at all. To younger customers, a chatbot might be infinitely preferable to the effort of an in-person meeting, even one held over video chat. Because these customers are still well short of their peak net wealth, and generally not at the life stage where the expense of human advice can be justified, their needs are easily overlooked. But these same customers will be looking to save, invest and retire in the years to come.

So I think it’s worth treating OpenAI as a serious contender in the advice space. I think regulators and policymakers will need to think carefully about the wider impact of advice being delivered by LLM-powered chatbots. I think advisers should consider how these tools might already be serving the needs of their customers (or their future customers) better than they do. Above all, I think we can expect the rise of new AI technologies to profoundly change the way customers interact with their finances in the years to come.